What an amazing journey! We had a shorter semester and an ambitious vision for our project, and in the end, we did a nice job!

Thanks for following our process and progress until now. We hope you enjoyed it as much as we did.

We are packaging the whole project as a deliverable for our clients. What follows is a record of our lessons learned and our footage from this journey.

Client Comment

Post Mortem

What we have developed

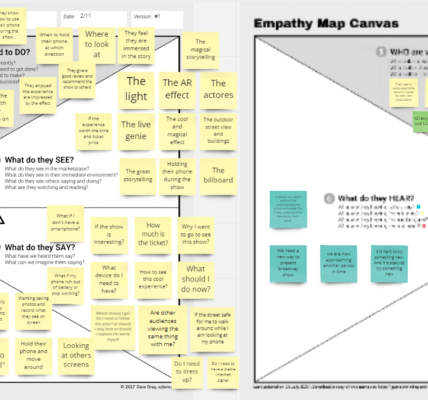

We spent the first two weeks on research and benchmarking. By collecting various use cases and surveying different technologies, we came up with a bag of ideas: which tricks we could play and which technology each trick would need. After our clients announced the IP (Aladdin) and gave us the scripts, we started to design the detailed effects line by line.

What went well

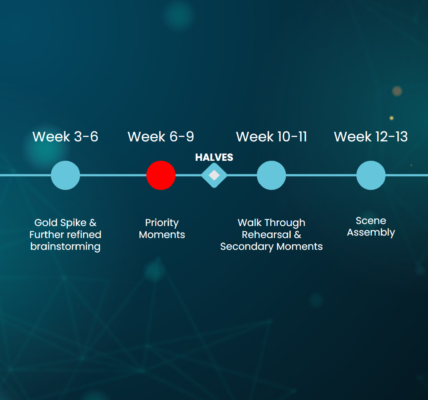

We had a good plan and schedule from the beginning. Stephanie (our client) provided an original version of the working schedule, and her knowledge and experience helped us quickly adapt it into a better, feasible version for the team.

Thanks to divide and conquer, our teamwork was smooth and efficient. Our team consists of three programmers and two artists. Based on everyone’s preferences, we made one programmer a full-time producer, and the other two programmers split the tasks into “Digital” and “Practical.” Since one of the programmers was working remotely this semester, she took responsibility for the AR effects (Digital). The other programmer took charge of IoT development (Practical), which sometimes required working on-site. One of the artists focused on 3D models and animation, and the other worked on 2D design and user experience research. Balanced workloads kept us moving forward and bought us extra buffer for bottlenecks.

We had some successful moments in the show, such as Column A&B, Genie Entrance, and Genie Graffiti.

[Google Cloud Anchor]

Google Cloud Anchor is the cornerstone of our show, since it lets us position everything in the AR space relative to a single point in real life. We had a rough time with the SDK because there was little documentation on installation and implementation. Reaching out to experts is always a good approach, so we met with some people who are familiar with ARCore and discussed how to get better performance out of Google’s cloud anchors.
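To illustrate what "relative to a single point" means, here is a minimal sketch of converting an anchor-relative offset into world coordinates. It is not the Cloud Anchor SDK and not our production code: `AnchorPose`, `anchor_to_world`, the yaw-only rotation, and the axis convention (Y is up) are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AnchorPose:
    """Hypothetical resolved anchor: world position plus yaw about the up (Y) axis."""
    x: float
    y: float
    z: float
    yaw_deg: float


def anchor_to_world(anchor, offset):
    """Convert an (x, y, z) offset in the anchor's frame into world coordinates."""
    ox, oy, oz = offset
    yaw = math.radians(anchor.yaw_deg)
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    # Rotate the offset about the vertical axis, then translate by the anchor position.
    world_x = anchor.x + cos_y * ox + sin_y * oz
    world_z = anchor.z - sin_y * ox + cos_y * oz
    world_y = anchor.y + oy
    return (world_x, world_y, world_z)


# Illustrative values only: an anchor two meters in front of the origin.
stage_anchor = AnchorPose(x=1.0, y=0.0, z=2.0, yaw_deg=0.0)
genie_position = anchor_to_world(stage_anchor, (1.0, 2.0, 3.0))
```

Because every effect is stored as an offset from the same anchor, moving or re-resolving that one anchor moves the whole show with it.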

[Venue Setup]

In the beginning, our programmer used an Arduino to operate the hardware and tested it at his place. When we moved into the Randy Pausch Interdisciplinary Studio, everything went to the next level. Since the studio has a lot of show-ready equipment, such as surround speakers and stage lighting, we saved a lot of time by not building the venue from scratch. Thanks to David Purta and all the members of the ETC support team, whose assistance let us focus on software development. Using Unity to send DMX signals that trigger all the equipment in the auditorium, we felt like a conductor leading an orchestra. This change gave the audience a more immersive experience and made the show a complete production.
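For readers unfamiliar with DMX, the sketch below shows the basic idea of a DMX512 frame: a start code of 0 followed by up to 512 channel levels, each from 0 to 255. Our show did this from Unity, so this Python version is only an illustration. The `DmxUniverse` class, the `GENIE_ENTRANCE_CUE` name, and its channel assignments are hypothetical, and actually sending the frame to hardware is left out.

```python
DMX_CHANNELS = 512  # a DMX512 universe carries up to 512 channels


class DmxUniverse:
    """Hypothetical in-memory model of one DMX universe."""

    def __init__(self):
        self._levels = bytearray(DMX_CHANNELS)  # all channels start at 0

    def set_channel(self, channel, level):
        """Set a 1-based channel to a level between 0 and 255."""
        if not 1 <= channel <= DMX_CHANNELS:
            raise ValueError(f"channel out of range: {channel}")
        if not 0 <= level <= 255:
            raise ValueError(f"level out of range: {level}")
        self._levels[channel - 1] = level

    def apply_cue(self, cue):
        """Apply a cue given as a {channel: level} mapping."""
        for channel, level in cue.items():
            self.set_channel(channel, level)

    def frame(self):
        """Return the frame bytes: start code 0x00 followed by all channel levels."""
        return bytes([0x00]) + bytes(self._levels)


# Assumed channel assignments, for illustration only.
GENIE_ENTRANCE_CUE = {1: 255, 2: 128, 10: 255}

universe = DmxUniverse()
universe.apply_cue(GENIE_ENTRANCE_CUE)
dmx_frame = universe.frame()
```

Treating each show moment as a small channel-to-level mapping keeps the lighting, fog, and other equipment cues in one place that the show controller can fire on time.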

[Animation]

From the original design, we noticed that our show needed many animations to bring the experience to life, while there was only one 3D artist on the team. Therefore, we decided to buy some existing models so that our artist could save time and focus on animation. Our artist worked closely with the actors to represent their image. Sometimes he reviewed their acting video clips repeatedly to mimic their body language and facial expressions. Sometimes he listened carefully to the soundtracks to fine-tune the animations and match the timing. In addition, he spent a lot of time modifying the models and animations to avoid any inappropriate representation of the actors.

[Interaction between Actors and Digital Assets]

Based on the feedback from the quarter walk-around and the half presentation, how to make the actors interact with the virtual effects was a constant concern. We could choose between at least two technical approaches: tracking the actor’s motion to generate AR extensions, or placing AR effects according to the actor’s blocking plan. Since real-time motion capture is too performance-demanding for the user’s phone, we chose the second approach. With the precise positioning and timing control mentioned above, the AR effects and prompts always show up at the right place and at the right time, so actors can rehearse again and again until they act as naturally and smoothly as possible.
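The blocking-plan approach can be sketched as a simple timed cue list. This is a minimal illustration rather than our actual implementation: `ArCue`, `cues_due`, the stage mark names, and all the times are assumptions; only the effect names come from the show.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArCue:
    """Hypothetical cue: when an AR effect appears and at which stage mark."""
    time_s: float      # seconds since the show started
    effect: str        # effect to spawn
    stage_mark: str    # label from the blocking plan


def cues_due(cues, previous_s, now_s):
    """Return the cues whose time falls in (previous_s, now_s], in show order."""
    ordered = sorted(cues, key=lambda cue: cue.time_s)
    return [cue for cue in ordered if previous_s < cue.time_s <= now_s]


# Illustrative timings only.
SHOW_CUES = [
    ArCue(time_s=45.5, effect="genie_graffiti", stage_mark="brick_wall"),
    ArCue(time_s=12.0, effect="genie_entrance", stage_mark="center"),
]

first_batch = cues_due(SHOW_CUES, previous_s=0.0, now_s=15.0)
```

Calling `cues_due` once per update with the previous and current show time fires each cue exactly once, and the actors can rehearse against the same fixed marks and times.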

[UX Design and Indirect Control]

In the AR world, it is not easy to design UI that gives direction without breaking the continuity of the immersive experience. We chose to keep it as simple as possible and use indirect control from the acting side, like gestures or eye contact. For example, when the show starts, we use vibration to notify the audience. In another scenario, when the audience needs to turn around to see the AR graffiti on the wall, we use the actor’s gesture (pointing at it) and a light-ball-like particle system (flying toward it) rather than virtual arrows or written instructions.

What could have been better

[Scrum and Task Tracking]

Learning from the bi-weekly producer round table, a treasured ETC resource, we tried to use “Scrum” to manage our schedule and daily tasks. However, it was hard to split tasks step by step, and the board was not straightforward for teammates to check. We kept using Scrum for task tracking until the half presentation, when we realized it was not a good fit for us. Later, we switched to tracking our schedule based on the user flow and story map. For daily tasks, we used a traditional but efficient method, meeting notes, to check the to-do list.

Looking back at agile development methodology, we didn’t use it well to monitor our daily work, and we lost the chance to improve our process and efficiency. There are several ways we could improve next time. First, we could use other productive online tools like Trello or Miro for task tracking in parallel. They are much easier and more straightforward for team members to update, and the producer could then synchronize them to the Scrum board. Second, before breaking tasks down into smaller ones, it’s better to clarify who owns each task. Using a “Responsibility Matrix” could help teams assign tasks to each team member by different layers (Owner, Design, Execute, etc.). A task owner knows the job best and takes ownership of the task, so the task owner is the best person to help the producer break the task down and add it to the Scrum board.

[Outdoor Testing]

One of the concerns for our project was how to build an outdoor venue.

Daylight, weather, noise, and so on: there are many uncontrollable factors to deal with when building an outdoor venue and playtesting outdoors. Therefore, we started with an indoor venue to avoid daily teardowns and to make playtesting easier. We moved everything outdoors for a playtest at the end of the semester. However, moving outdoors brought a few unexpected challenges. First, it takes a lot of effort and time to prepare. Before the outdoor playtest, we spent a few days learning and testing the setup process for the portable speakers, fog machines, and lighting separately. On the day we moved outside, it took over an hour to move out and half an hour to move back in, but we only playtested and recorded for half an hour. Second, outdoor playtests are highly dependent on weather conditions. It rains and snows a lot in Pittsburgh during the spring semester, and for safety, we cannot expose the equipment to wet conditions. Also, sunset falls later than 7:00 pm once daylight saving time starts, which creates a conflict: we would prefer to playtest after dark, but we are only allowed to access the building before 7:00 pm. In strong daylight, our lighting system is not bright enough to be seen.

These challenges meant our outdoor playtest didn’t go well, but it helped us better understand and anticipate the final delivery in New York City. We also learned that some AR effects work better in the open, like the Genie flying up into the sky or the graffiti appearing on the brick wall. In addition, we noticed that a poor Wi-Fi connection causes noticeable latency in the show, so we told our clients to be well prepared when they rebuild the show in Shubert Alley.

Lessons Learned

[In experience design, it doesn’t have to work – it just has to look like it works]

Since we are developing an experience rather than a game, it doesn’t have to work; it just has to look like it works. For example, in the beginning, we tried to use GPS positioning, OpenPose, or other AI and real-time recognition technology to implement the tracking feature. Later, we figured out that detecting actors’ motion and spawning AR effects accordingly is both hard and unnecessary. The alternative is simply to show AR effects at precise positions on the stage to mimic live interaction between actors and digital assets. We can use the blocking plan to mark the labels for each AR effect, so the actors know precisely when and where the AR effects happen during the show. Don’t over-rely on technology and forget that people are an essential part of the experience as well.

[Know your scope – don’t overpromise and underdeliver]

Our clients gave us a grand vision at the beginning of the semester. We had a hard time scoping it down, but we eventually landed on a scope that we could accomplish in a semester so that no one would be disappointed.

An MVP (Minimum Viable Product) is always an excellent way to help a team refine its scope. For example, in the beginning, we had many concerns about including live actors, directing, outdoor testing, and completing the entire show. Using the MVP to restructure the development process, we decided to act ourselves until delivery to the clients in New York and to work on the indoor venue first. We also prioritized the show by scenes and lines, focusing only on the show’s primary moments.

[Immersion is hard to capture and easy to break]

Immersion is hard to capture and easy to break. We have to make sure all of our animations and assets are consistent with the feeling of the show so that guests stay immersed the entire time. The [Animation] and [UX Design and Indirect Control] sections above describe how we built an immersive feeling for the audience.

Future

[Delivery]

From June 4th to the 6th, we will travel to New York City and rebuild the show at Shubert Alley for filming. Our clients will edit the recording into a demo clip to show to investors and audiences. Professional actors will participate in the show: Juwan Crawley as the Genie and Nikhil Saboo as Aladdin. We are not worried about the equipment as long as it has standard DMX input/output. The most challenging part is relocating everything to New York’s outdoor venue. It takes time to recalibrate the location and orientation of every AR effect against the real-world environment.