
The Earth Theater:

The Earth Theater at the Carnegie Museum of Natural History is a Panoramic SkyVision Theater, a product of SkySkan, Inc. The theater has a 40-foot diameter, a 210-degree horizontal viewing angle, and a 30-degree vertical viewing angle, and it seats an audience of 68. The theater's five digital light projectors, slide projectors, lighting instruments, and 4.1-channel surround sound are driven by SkySkan's Spice Multimedia Control System. The SkyVision system was specifically designed for the playback of pre-rendered full-dome and panoramic digital video.
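To give a sense of scale, assume (this is not stated above) that the projected image lies on a circle matching the theater's 40-foot diameter, so its radius is 20 feet. The arc covered by the 210-degree horizontal view is then:

$$
s = r\,\theta = 20\ \text{ft} \times \left(210^\circ \times \frac{\pi}{180^\circ}\right) = \frac{70\pi}{3}\ \text{ft} \approx 73.3\ \text{ft}
$$

Split evenly among the five projectors with no overlap, that is 42 degrees and roughly 14.7 feet of arc per projector; any overlap used for blending makes each projector's share somewhat wider.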


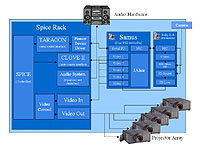

ETC System Diagram


ETC Panoramic Real-Time Interaction System:

The Entertainment Technology Center's system consists of two off-the-shelf 866 MHz Intel Pentium III computers: one handles serial communication with Spice and renders graphics to all five projectors, and the other handles sound playback and interactive inputs. The rendering computer employs five ATI Radeon video cards on the PCI bus. The sound/interaction computer uses a Creative Labs Sound Blaster Live! card to create three-dimensional spatialized audio. Audience input is captured through an active infrared camera, coupled with an ImageNation PXC200 frame grabber, and an omnidirectional microphone plugged into the Sound Blaster card.
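How the interaction computer interprets these inputs is not described here. As a hedged illustration only, assuming each infrared frame arrives as a grayscale array of 0-255 values and microphone audio arrives as blocks of floating-point samples in [-1.0, 1.0], the sketch below shows two simple detectors such a machine could run: the centroid of bright pixels (an actively illuminated participant typically shows up bright in an IR image) and an RMS loudness threshold. The function names and thresholds are hypothetical; nothing here is the PXC200 or Sound Blaster API.

```python
import math


def bright_centroid(frame, threshold=200):
    """Centroid (x, y) of pixels at or above `threshold`, or None if there are none.

    `frame` is a grayscale image as a list of rows of 0-255 values, standing in
    (hypothetically) for one frame delivered by the frame grabber.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def rms_level(samples):
    """Root-mean-square level of a block of audio samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def is_loud(samples, threshold=0.1):
    """True when a block is loud enough to count as audience noise (hypothetical threshold)."""
    return rms_level(samples) >= threshold
```

In practice the thresholds would have to be tuned to the room's lighting and ambient noise.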

The main software used on the project is Alice, a 3D authoring environment developed by the Stage3 Research Group at Carnegie Mellon University. Alice is designed for the rapid prototyping and development of interactive 3D graphics, and it was originally intended for content viewed on desktop computers and head-mounted displays. The ETC Team and Stage3 collaborated to adapt Alice for playback across multiple displays by having each camera object in the virtual environment output to a separate video card.
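With one camera per video card, each camera needs its own heading and field of view. The sketch below computes them under stated assumptions: five identical cameras evenly dividing the 210-degree horizontal view, optionally widened so that neighboring cameras share `overlap_deg` degrees for blending. `CameraView` and `panoramic_cameras` are hypothetical names for illustration and are not part of Alice.

```python
from dataclasses import dataclass


@dataclass
class CameraView:
    yaw_deg: float    # heading of the camera's center; 0 is the middle of the screen
    h_fov_deg: float  # horizontal field of view of this camera


def panoramic_cameras(total_h_fov=210.0, num_cameras=5, overlap_deg=0.0):
    """Split a panoramic field of view into one camera per projector.

    Adjacent cameras share `overlap_deg` degrees so the overlap can be blended;
    the outer edges of the first and last cameras land exactly on the panorama's edges.
    """
    if num_cameras < 1:
        raise ValueError("need at least one camera")
    h_fov = (total_h_fov + (num_cameras - 1) * overlap_deg) / num_cameras
    step = h_fov - overlap_deg          # angle between neighboring camera centers
    left_edge = -total_h_fov / 2.0
    return [CameraView(left_edge + h_fov / 2.0 + i * step, h_fov)
            for i in range(num_cameras)]
```

With the defaults this gives headings of -84, -42, 0, 42, and 84 degrees, each camera covering 42 degrees; with a 10-degree overlap each camera widens to 50 degrees and the headings become -80, -40, 0, 40, and 80.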

To create a seamless view across the five projected images, the ETC Team employed an alpha-channel gradient within the virtual world to blend the edges of adjacent images. This caused a problem: one camera could see another camera's blending object. To avoid this, the visibility of each blending object was tied to the refresh rate of the projectors.
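An alpha-channel edge blend of this kind can be sketched as a ramp across the region where two projected images overlap: one image fades out while its neighbor fades in, so their weights sum to one. This is a minimal illustration, not the ETC implementation; the function names, the per-column layout, and the optional gamma exponent (a common correction for projector response that the original does not mention) are all assumptions. The resulting values are the kind of gradient that could be painted onto a blending object in front of each camera.

```python
def blend_ramp(width, gamma=1.0):
    """Alpha values that fade an image out across an overlap `width` pixels wide.

    Values are sampled at pixel centers, so they fall from just under 1 to just
    over 0. The neighboring image uses the same ramp reversed, and the two linear
    ramps sum to 1 at every column.
    """
    linear = [1.0 - (i + 0.5) / width for i in range(width)]
    # Optional (assumption): pre-compensate for a projector gamma so that the
    # emitted *light*, roughly proportional to value ** gamma, sums to 1.
    return [a ** (1.0 / gamma) for a in linear]


def edge_alphas(image_width, overlap, has_left_neighbor, has_right_neighbor, gamma=1.0):
    """Per-column alpha for one projector image in a side-by-side row of images."""
    if not 0 <= 2 * overlap <= image_width:
        raise ValueError("overlap must fit twice within the image width")
    alphas = [1.0] * image_width
    if overlap == 0:
        return alphas
    ramp = blend_ramp(overlap, gamma)
    if has_right_neighbor:
        alphas[image_width - overlap:] = ramp   # fade out toward the right edge
    if has_left_neighbor:
        alphas[:overlap] = ramp[::-1]           # fade in from the left edge
    return alphas
```

For five projectors, the outer two images would pass `has_left_neighbor=False` or `has_right_neighbor=False` respectively, and the middle three would blend on both sides.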

Because such an immense computational load was placed on the rendering computer, audio playback and the processing of audio and video interactions were offloaded to a separate machine. The remote sound and vision server programs were written by the students on the project.
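The protocol between the rendering machine and the remote servers is not described here. One plausible approach, shown purely as a hypothetical sketch, is to send small self-describing messages over UDP on the local network; the JSON message format, the command names, and the address `127.0.0.1:9000` are all assumptions.

```python
import json
import socket

# Hypothetical address of the sound/interaction server.
SOUND_SERVER = ("127.0.0.1", 9000)


def encode_command(command, **params):
    """Pack a command name and its parameters into a UTF-8 JSON datagram."""
    return json.dumps({"cmd": command, **params}).encode("utf-8")


def decode_command(datagram):
    """Unpack a datagram into (command, params)."""
    message = json.loads(datagram.decode("utf-8"))
    command = message.pop("cmd")
    return command, message


def send_command(sock, address, command, **params):
    """Send one command as a single UDP datagram (no acknowledgement)."""
    sock.sendto(encode_command(command, **params), address)


def serve_forever(handler, address=SOUND_SERVER):
    """Server side: receive datagrams and pass each decoded command to `handler`."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(address)
        while True:
            datagram, _sender = sock.recvfrom(65535)
            command, params = decode_command(datagram)
            handler(command, params)
```

For example, the rendering machine could call `send_command(sock, SOUND_SERVER, "play", sound="wind.wav", azimuth_deg=-60.0)` on a socket created with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`. UDP suits fire-and-forget cues because a lost datagram drops only one cue rather than stalling rendering; critical events might still need acknowledgements.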
